Data Extraction


What Is Data Extraction?

If the source data uses a surrogate key, the warehouse must keep track of it even though it is never used in queries or reports. This is done by creating a lookup table that contains the warehouse surrogate key and the originating key. This way, the dimension is not polluted with surrogates from various source systems, while the ability to update is preserved. Unique keys play an important part in all relational databases, as they tie everything together. A unique key is a column that identifies a given entity, whereas a foreign key is a column in another table that refers to a primary key.
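The lookup table described above can be sketched in a few lines of Python. This is a minimal illustration, not a warehouse implementation: the class name `KeyLookup`, the method `surrogate_for`, and the source system names `"crm"` and `"billing"` are all hypothetical.

```python
from itertools import count


class KeyLookup:
    """Maps (source_system, source_key) pairs to warehouse surrogate keys."""

    def __init__(self):
        self._next_key = count(1)   # warehouse-side surrogate key generator
        self._by_source = {}        # (source_system, source_key) -> surrogate

    def surrogate_for(self, source_system, source_key):
        """Return the existing surrogate for a source key, or assign a new one."""
        pair = (source_system, source_key)
        if pair not in self._by_source:
            self._by_source[pair] = next(self._next_key)
        return self._by_source[pair]


lookup = KeyLookup()
crm_key = lookup.surrogate_for("crm", 1001)          # first sighting: new surrogate
billing_key = lookup.surrogate_for("billing", 1001)  # same source key, other system
crm_again = lookup.surrogate_for("crm", 1001)        # repeat sighting: same surrogate
```

Because the originating key lives only in the lookup, two source systems can reuse the same key value without colliding in the dimension.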

Data Extraction Defined

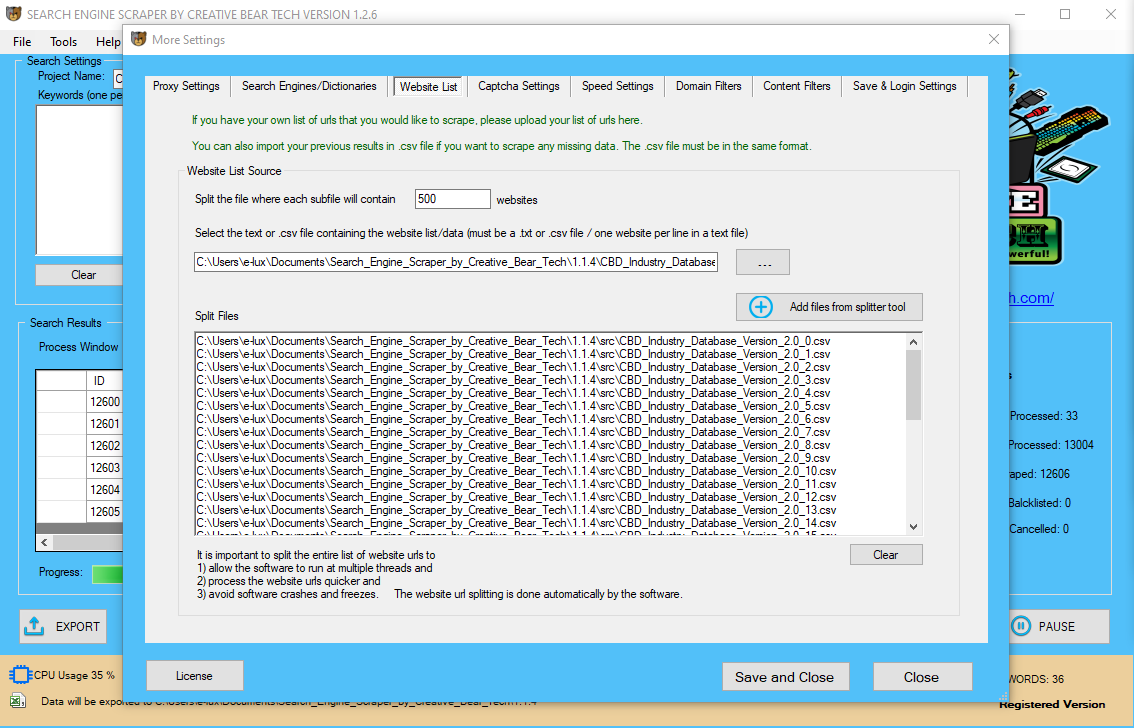

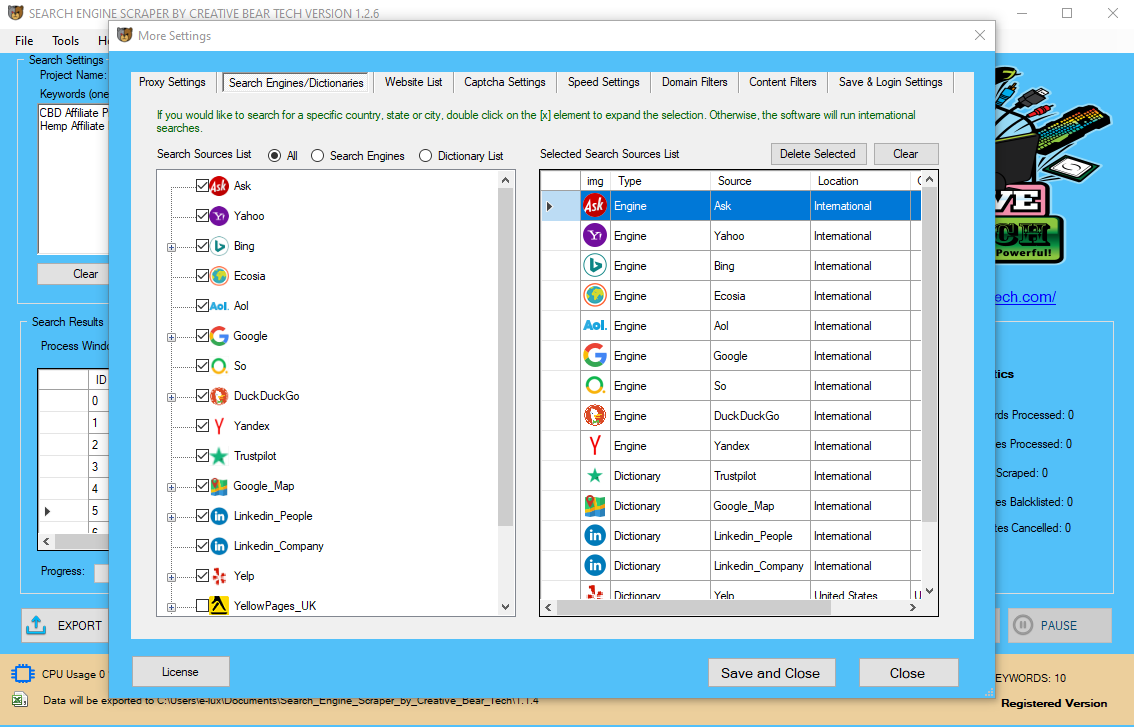


Trigger-based techniques affect performance on the source systems, and this impact should be carefully considered before implementation on a production source system. If timestamp information is not available in an operational source system, you will not always be able to modify the system to include timestamps. Such a modification would require, first, modifying the operational system's tables to include a new timestamp column and then creating a trigger to update the timestamp column following every operation that modifies a given row.
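As a rough sketch of the two steps just described (a timestamp column plus a trigger that maintains it), the following uses Python's built-in `sqlite3` module with an in-memory database. The table `orders` and its columns are hypothetical, and a production source system would use its own database's trigger syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    status TEXT NOT NULL,
    last_modified TEXT            -- step 1: the added timestamp column
);

-- step 2: triggers that stamp the row after each modifying operation
CREATE TRIGGER orders_touch_insert AFTER INSERT ON orders
BEGIN
    UPDATE orders
    SET last_modified = strftime('%Y-%m-%d %H:%M:%f', 'now')
    WHERE id = NEW.id;
END;

CREATE TRIGGER orders_touch_update AFTER UPDATE OF status ON orders
BEGIN
    UPDATE orders
    SET last_modified = strftime('%Y-%m-%d %H:%M:%f', 'now')
    WHERE id = NEW.id;
END;
""")

conn.execute("INSERT INTO orders (status) VALUES ('new')")
created = conn.execute(
    "SELECT last_modified FROM orders WHERE id = 1").fetchone()[0]

conn.execute("UPDATE orders SET status = 'shipped' WHERE id = 1")
updated = conn.execute(
    "SELECT last_modified FROM orders WHERE id = 1").fetchone()[0]
```

Every write to `orders` now performs an extra update, which is the performance cost the paragraph above warns about.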

How Is Data Extracted?

An important consideration for extraction is incremental extraction, also called Change Data Capture. If a data warehouse extracts data from an operational system on a nightly basis, then the warehouse requires only the data that has changed since the last extraction (that is, the data that has been modified in the past 24 hours). Additional information about the source object is necessary for further processing.
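One simple way to picture incremental extraction is a timestamp "watermark" that records when the last extraction ran. The sketch below assumes each source row carries a `last_modified` value; the function name `extract_changed` and the sample rows are illustrative only.

```python
from datetime import datetime


def extract_changed(rows, last_extracted_at):
    """Return only the rows modified after the previous extraction."""
    return [row for row in rows if row["last_modified"] > last_extracted_at]


source_rows = [
    {"id": 1, "last_modified": datetime(2024, 1, 1, 9, 0)},
    {"id": 2, "last_modified": datetime(2024, 1, 2, 3, 30)},
    {"id": 3, "last_modified": datetime(2024, 1, 2, 22, 15)},
]

# Watermark left behind by the previous nightly run.
watermark = datetime(2024, 1, 2, 0, 0)
changed = extract_changed(source_rows, watermark)

# Advance the watermark so the next run skips what was just extracted.
new_watermark = max(row["last_modified"] for row in changed)
```

Here only rows 2 and 3 are picked up; row 1 was already covered by the earlier run.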

Structured Data

Structured data can be extracted as it is, meaning that you do not have to work on or manipulate the data at the source before extracting it. The way you go about extracting data can change drastically depending on the source of the data.

Many critical appraisal tools are available. Just remember to use a tool that is appropriate for the study design or designs included in your review.

ZE is the developer of ZEMA, a comprehensive platform for data aggregation, validation, modeling, automation, and integration. By providing data collection, analytics, curve management, and integration capabilities, ZEMA offers data solutions for clients in all markets and industries. ZEMA is available on-premise, as a cloud solution via ZE Cloud, as Software as a Service, or as Data-as-a-Service.

To keep things simple, we will look at two of the biggest categories of data sources. Data extraction, in this context, refers to an automated process for gathering data that replaces manual copying and pasting. Hand-written extraction code has a drawback, however: as soon as the structure of the web pages changes, the code has to be rewritten, or the whole approach changed.

Tools in this space vary. One provides an API for news, blogs, online discussions and reviews, and even the dark web. You can find tutorials on the vendors' websites to get you on board quickly, and the learning process is smooth and easy. One tool's free version allows users to build at most five tasks, and the extracted data is retained for only two weeks; if you extract a small amount of data, the free version may be the best option for you. Another, even though it is a Chrome extension, has a cloud scraper version that can extract data at any time.


- Such updating may range from adjusting the baseline risk (intercept or hazard) of the original model, to adjusting the predictor weights or regression coefficients, to adding new predictors or deleting existing predictors from the model.

- Usually this second dataset is comparable to the first, for example, in patients' clinical and demographic characteristics, reflecting the target population of the model development study.

- Sometimes, however, it is of interest to examine whether a model can also have predictive ability in other scenarios.

- A crucial point is that a validation study should evaluate the exact published model (formula) derived from the initial data.

For example, a financial institution might hold information on a customer in several departments, and each department might list that customer's information in a different way. The membership department might list the customer by name, whereas the accounting department might list the customer by number. ETL can bundle all of these data elements and consolidate them into a uniform presentation, such as for storing in a database or data warehouse.

As another example, you might be aiming to extract data from the YellowPages website with a web scraper. Thankfully, in this scenario, the data is already structured by business name, business website, phone number, and other predetermined data points. Structured data is usually already formatted in a way that fits the needs of your project.

There are many ways to achieve automation, such as writing the code yourself or hiring a freelancer to do the job for you. However, the most cost-effective approach may be a SaaS tool that handles the process in a reasonable time.

First, we will use base R functions to extract rows and columns from a data frame. When performing data analysis or working on data science projects, these commands come in handy for extracting data from a dataset.
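The customer consolidation described at the start of this passage can be sketched as follows. The sketch assumes, purely for illustration, that the membership records also carry the customer number so the two departments' rows can be matched; the field names and sample values are hypothetical.

```python
# Hypothetical departmental extracts for the same customer.
membership = [
    {"customer_name": "Ada Lovelace", "customer_no": "C-17", "tier": "gold"},
]
accounting = [
    {"customer_no": "C-17", "balance": 120.50},
]


def consolidate(membership_rows, accounting_rows):
    """Merge per-department records into one uniform record per customer."""
    by_number = {row["customer_no"]: row for row in accounting_rows}
    merged = []
    for member in membership_rows:
        account = by_number.get(member["customer_no"], {})
        merged.append({
            "customer_no": member["customer_no"],
            "name": member["customer_name"],
            "tier": member["tier"],
            "balance": account.get("balance"),  # None if accounting has no row
        })
    return merged


customers = consolidate(membership, accounting)
```

Each consolidated record uses one set of field names regardless of which department supplied the value, which is the "uniform presentation" the text refers to.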


Once you decide what data you want to extract, and the analysis you want to perform on it, our data experts can eliminate the guesswork from the planning, execution, and maintenance of your data pipeline. The data may, for example, contain PII (personally identifiable information) or other information that is highly regulated.

Another way that companies use ETL is to move data to another application entirely. For instance, the new application might use another database vendor and most likely a very different database schema. ETL can be used to transform the data into a format suitable for the new application.


An intrinsic part of extraction is parsing the extracted data, which results in a check of whether the data meets an expected pattern or structure. You may need to remove sensitive data, such as PII, as part of the extraction, and you will also need to move all of your data securely. For example, you might want to encrypt the data in transit as a security measure. Removing the need for large amounts of manual data entry means your employees can spend more time on important tasks that only a human can do.

With online extractions, you need to consider whether the distributed transactions are using original source objects or prepared source objects. This influences the transportation method and the need for cleaning and transforming the data.
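The parsing check and the removal of sensitive fields can be sketched together. The record layout, the phone number pattern, and the `ssn` field below are assumptions made for the example, not part of any particular tool.

```python
import re

# Assumed expected shape for a phone number: 555-010-1234
PHONE_PATTERN = re.compile(r"^\d{3}-\d{3}-\d{4}$")


def is_valid(record):
    """Check that an extracted record matches the expected structure."""
    has_name = bool(record.get("business_name"))
    has_phone = bool(PHONE_PATTERN.match(record.get("phone", "")))
    return has_name and has_phone


def strip_sensitive(record, sensitive_fields=("ssn",)):
    """Return a copy of the record without the listed sensitive fields."""
    return {k: v for k, v in record.items() if k not in sensitive_fields}


extracted = [
    {"business_name": "Acme Bakery", "phone": "555-010-1234", "ssn": "000-00-0000"},
    {"business_name": "", "phone": "not a number"},
]

clean = [strip_sensitive(r) for r in extracted if is_valid(r)]
```

Records that fail the check are dropped here for brevity; a real pipeline might instead route them to a reject file for review.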


Often in data analysis, we want to get a sense of how many complete observations we have. This can be helpful in determining how we handle observations with missing data points. At times, the data collection process carried out by machines involves a lot of errors and inaccuracies in reading. Data manipulation is also used to remove these inaccuracies and make data more accurate and precise.

Webhose.io is highly effective at getting news data feeds for news aggregators and social media monitoring sites like Hootsuite, Kantar Media, Mention, etc.

The reporting of performance based on thresholds chosen from the data itself can produce over-optimistic and biased performance. Given the strengths and weaknesses of various modelling and predictor selection strategies, the systematic review should record all information on the multivariable modelling, so readers can gain insight into how each model was developed. Prediction models for diagnosis of venous thromboembolism in patients suspected of having the disease. Prognostic models for survival, for independence in activities of daily living, and for getting home, in patients with acute stroke. Regardless of whether you select the Cochrane tool or another RoB tool, make sure you are using a validated critical appraisal tool.

ETL systems commonly integrate data from multiple applications (systems), typically developed and supported by different vendors or hosted on separate computer hardware. The separate systems containing the original data are frequently managed and operated by different employees. For example, a cost accounting system may combine data from payroll, sales, and purchasing.

In many cases, the primary key is an auto-generated integer that has no meaning for the business entity being represented and exists solely for the purpose of the relational database. Such a key is commonly referred to as a surrogate key.
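Returning to the point about complete observations made earlier in this passage, a count of complete rows can be sketched in plain Python. The sample data and the rule that `None` or an empty string counts as missing are assumptions for the example.

```python
def is_complete(row):
    """A row is complete when no field is None or an empty string."""
    return all(value is not None and value != "" for value in row.values())


observations = [
    {"age": 34, "city": "Leeds", "income": 41000},
    {"age": None, "city": "York", "income": 38000},
    {"age": 51, "city": "", "income": 52000},
    {"age": 29, "city": "Hull", "income": 30500},
]

complete_count = sum(is_complete(row) for row in observations)
incomplete = [row for row in observations if not is_complete(row)]
```

Knowing how many rows are incomplete, and which fields are missing, helps decide whether to drop those rows or fill in the gaps.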
Data warehousing procedures usually subdivide a big ETL process into smaller pieces running sequentially or in parallel. To keep track of data flows, it makes sense to tag each data row with "row_id", and tag each piece of the process with "run_id". In case of a failure, having these IDs helps to roll back and rerun the failed piece. ETL processes can involve considerable complexity, and significant operational problems can occur with improperly designed ETL systems.

When you work with unstructured data, a large part of your task is to prepare the data in such a way that it can be extracted. Most likely, you will store it in a data lake until you plan to extract it for analysis or migration. You'll probably want to clean up "noise" from your data by doing things like removing whitespace and symbols, removing duplicate results, and determining how to handle missing values.

Prognostic models for activities of daily living, to be used in the early post-stroke phase. Existing models for predicting the risk of having undiagnosed or developing (incident) type 2 diabetes in adults.

If the primary key of the source data is required for reporting, the dimension already contains that piece of information for each row.
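The "row_id" and "run_id" tagging mentioned above can be illustrated with a small sketch. The helper names `tag_rows` and `roll_back`, and the run identifiers, are hypothetical; a real warehouse would typically do this in the database rather than in memory.

```python
def tag_rows(rows, run_id):
    """Attach a row_id and the run_id of the current ETL piece to each row."""
    return [dict(row, row_id=i, run_id=run_id)
            for i, row in enumerate(rows, start=1)]


def roll_back(loaded_rows, failed_run_id):
    """Remove every row written by the failed piece so it can be rerun."""
    return [row for row in loaded_rows if row["run_id"] != failed_run_id]


warehouse = []
warehouse += tag_rows([{"sku": "A1"}, {"sku": "B2"}], run_id="run-001")
warehouse += tag_rows([{"sku": "C3"}], run_id="run-002")

# Suppose the second piece failed partway through: undo only its rows.
warehouse = roll_back(warehouse, failed_run_id="run-002")
```

Because every row records which piece wrote it, the rollback leaves the rows from the successful piece untouched.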
